Apple’s announcement was met with mixed reactions over privacy concerns.

Earlier this week, Doha News reported on Apple’s plans to introduce a child abuse detection system for its iCloud service.

The announcement has drawn mixed reactions, and the debate has intensified over the past few days.

On the one hand, some are pleased with Apple’s efforts to prevent predators from accessing Child Sexual Abuse Material (CSAM). On the other hand, others worry that such systems open the door to privacy violations worldwide, particularly in countries with limited privacy protections.

Both groups raise valid concerns, and it’s important to scrutinise companies when they implement features that could put user privacy at risk.

To understand what this move entails and whether the concerns are justified, we take a deep dive into Apple’s CSAM prevention system to see whether Apple can implement such features safely.

How does the scanning system work?

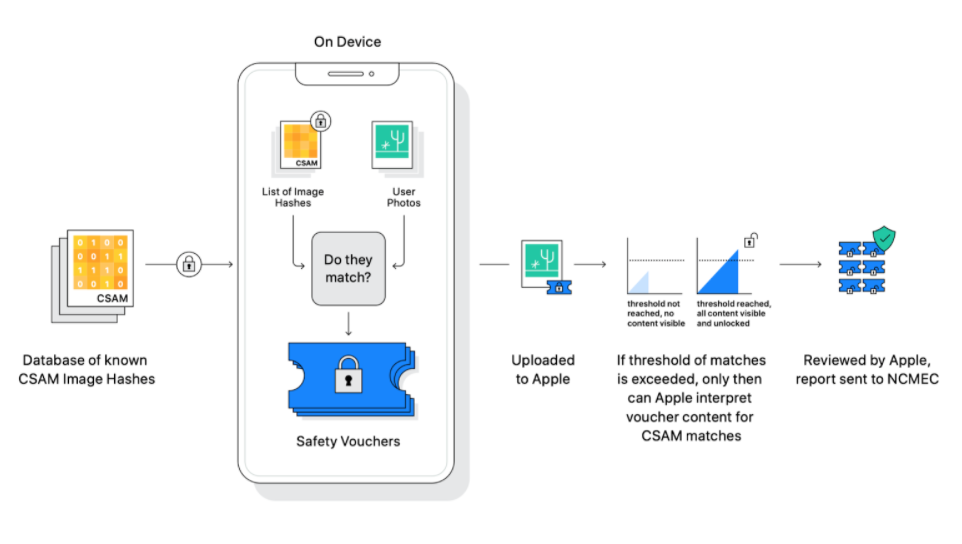

Apple’s system does not work by analysing the content of individual photos on a user’s iPhone to look for CSAM. Instead, Apple devices will include a list of hashes of known CSAM images, supplied by child-safety organisations.

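A hash is a short code that represents an image without containing any of its content. Think of it as a one-way street: an image can be converted into a hash, but a hash cannot be reversed back into an image. Identical photos produce the same hash. Apple’s hashing technology, called NeuralHash, is also designed so that visually identical images, such as a resized or recompressed copy of a photo, produce the same hash.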
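
To illustrate the one-way, same-input-same-output idea, here is a minimal sketch using SHA-256 from Python’s standard `hashlib` module. This is an assumption made purely for illustration: SHA-256 is a generic cryptographic hash, not Apple’s NeuralHash. Unlike a perceptual hash, SHA-256 gives a completely different result when even one byte of the file changes, which is exactly why Apple uses a perceptual hash that tolerates resizing and recompression. The byte strings below are hypothetical stand-ins for image files.

```python
import hashlib

def image_hash(data: bytes) -> str:
    """Return the SHA-256 hex digest of raw image bytes.

    Illustrative stand-in only: this is a cryptographic hash, not Apple's NeuralHash.
    """
    return hashlib.sha256(data).hexdigest()

# Hypothetical stand-in for the bytes of a photo file.
photo = b"\x89PNG...example image bytes..."
copy = bytes(photo)             # an identical copy of the same file
edited = photo[:-1] + b"!"      # the same file with its last byte changed

h = image_hash(photo)
print(h == image_hash(copy))    # True: identical input gives an identical hash
print(h == image_hash(edited))  # False: a one-byte change gives an unrelated hash
print(len(h))                   # 64 hex characters, whatever the input size
```

The digest reveals nothing about what the bytes depict, and there is no practical way to recover `photo` from `h`.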

So instead of inspecting a user’s photos directly, Apple will generate a hash for each photo being uploaded to iCloud. These hashes cannot reveal the original images. The system then compares the generated hashes against the list of known CSAM hashes to look for matches. As a result, the system can identify known material without analysing what the photos actually depict.

If the number of matches reaches a set threshold, the user’s account is flagged to Apple, which then manually reviews the identified photos. Apple says that requiring several matches is intentional, as it helps avoid falsely flagging users.
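
The match-and-threshold logic described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Apple’s implementation: `image_hash` uses SHA-256 as a stand-in for NeuralHash, `MATCH_THRESHOLD = 3` is a hypothetical value (the article does not give Apple’s figure), and the byte strings are made-up placeholders for images. Apple’s real design also wraps this step in cryptography (private set intersection and threshold secret sharing) so that neither the device nor Apple learns about individual matches below the threshold; the sketch leaves that out entirely.

```python
import hashlib

# Hypothetical threshold for illustration; not Apple's actual value.
MATCH_THRESHOLD = 3

def image_hash(data: bytes) -> str:
    # Stand-in hash function; Apple's system uses a perceptual hash instead.
    return hashlib.sha256(data).hexdigest()

def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count how many photos have a hash that appears in the known list."""
    return sum(1 for p in photos if image_hash(p) in known_hashes)

def should_flag(photos: list[bytes], known_hashes: set[str],
                threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag the account for manual review only once the threshold is reached."""
    return count_matches(photos, known_hashes) >= threshold

# Usage with made-up byte strings standing in for images.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
library_a = [b"holiday", b"known-1", b"cat"]              # one match
library_b = [b"known-1", b"known-2", b"known-3", b"dog"]  # three matches

print(should_flag(library_a, known))  # False: below the threshold
print(should_flag(library_b, known))  # True: threshold reached
```

Note that only hashes are compared: the function never needs to know what any photo shows, and a single match on its own never triggers a flag.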

If the manual review confirms the matches, Apple will disable the user’s account and report the user to the National Center for Missing & Exploited Children (NCMEC).

Apple believes that the safeguards it has put in place should address users’ concerns, though a few questions remain.

Can the system incorrectly flag users?

Firstly, a threshold number of CSAM matches, rather than just one, must be detected on the user’s device. When TechCrunch asked why a threshold needs to exist, Apple’s Head of Privacy, Erik Neuenschwander, said it is necessary to reduce the likelihood of false matches.

Additionally, a human review step is in place to catch any case where the system has incorrectly matched enough photos to meet the threshold.

As a result, Apple claims that its system is extremely accurate and that the chance of incorrectly flagging a given account is “less than one in one trillion per year”.
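
Why does a threshold help so much? A rough way to see it is to treat each photo as having a small, independent chance of falsely matching, so the number of false matches in a library follows a binomial distribution. The sketch below computes the probability of reaching a given threshold under that model. Everything here is an assumption for illustration: the library size `n`, the per-photo false-match rate `p` and the thresholds are hypothetical, real false matches need not be independent, and Apple’s one-in-a-trillion figure comes from its own analysis rather than from this calculation.

```python
import math

def prob_at_least(n: int, p: float, t: int) -> float:
    """Return P(X >= t) where X ~ Binomial(n, p).

    n: number of photos checked (hypothetical)
    p: per-photo false-match probability, 0 < p < 1 (hypothetical)
    t: number of matches required before an account is flagged
    """
    if t <= 0:
        return 1.0
    if t > n:
        return 0.0
    # First tail term C(n, t) * p**t * (1 - p)**(n - t), computed in log space
    # so that large binomial coefficients never overflow a float.
    log_term = (math.lgamma(n + 1) - math.lgamma(t + 1) - math.lgamma(n - t + 1)
                + t * math.log(p) + (n - t) * math.log1p(-p))
    term = math.exp(log_term)
    total = 0.0
    k = t
    # Sum the tail directly (rather than 1 minus the head) to avoid cancellation.
    while k <= n and term > 0.0:
        total += term
        # Ratio between consecutive terms: P(X = k + 1) / P(X = k).
        term *= (n - k) / (k + 1) * p / (1 - p)
        k += 1
    return total

# Hypothetical inputs: a 10,000-photo library and a one-in-a-million
# chance that any single photo falsely matches.
n, p = 10_000, 1e-6
for t in (1, 10, 30):
    print(f"threshold {t:>2}: {prob_at_least(n, p, t):.3e}")
```

With a threshold of one, roughly one such account in a hundred would be falsely flagged under these made-up numbers; each extra required match multiplies that probability by a further small factor, which is the intuition behind Apple’s decision to require several matches.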

Is my privacy at risk?

Apple has repeatedly emphasised that its CSAM detection is “designed with user privacy in mind”. However, this has done little to ease the worries of some privacy-conscious users.

Matthew D. Green, a cryptography professor at Johns Hopkins University, criticised Apple’s move and said he’s considering switching to an Android phone to escape this system.

Even supporters of the new detection features have described them as a “slippery slope”.

The concern is that once Apple implements CSAM scanning, the system could in theory be expanded to identify other types of images. In particular, critics fear that governments may force Apple to search for images in ways that suppress journalism and freedom of speech.

Neuenschwander addressed these concerns in his TechCrunch interview, emphasising the numerous protections in place to prevent abuse of the system.

Firstly, he said that the list of prohibited hashes is the same for all users and is built into the operating system, so governments cannot demand that changes be made remotely and take effect instantly.

Secondly, since the system relies on multiple matches to meet a threshold, governments cannot use it to detect the presence of an individual photo.

Finally, the required human review step ensures that Apple does not report a user unless their account actually contains a collection of CSAM.

It’s clear that Apple has put a lot of thought into its CSAM prevention mechanism and that the company anticipated such backlash.

Can I opt out?

Users backing up their photos to iCloud Photo Library cannot opt out of the system, as allowing this would render the detection process useless.

However, users can opt out by simply disabling photo backup to iCloud. Photos will still be saved on the iPhone, but users will lose cloud backup functionality.

Writing for The New York Times, Brian X. Chen explained that instances like this serve as a reminder that we no longer truly own our digital data. He said he uses a hybrid approach to photo backup so that he does not depend on a single cloud provider. Even if you have no issues with Apple’s CSAM system, it is a reminder that storing our photos with only one provider comes with consequences.

What else is Apple doing to combat CSAM?

Apple announced two other changes to combat the spread of CSAM online.

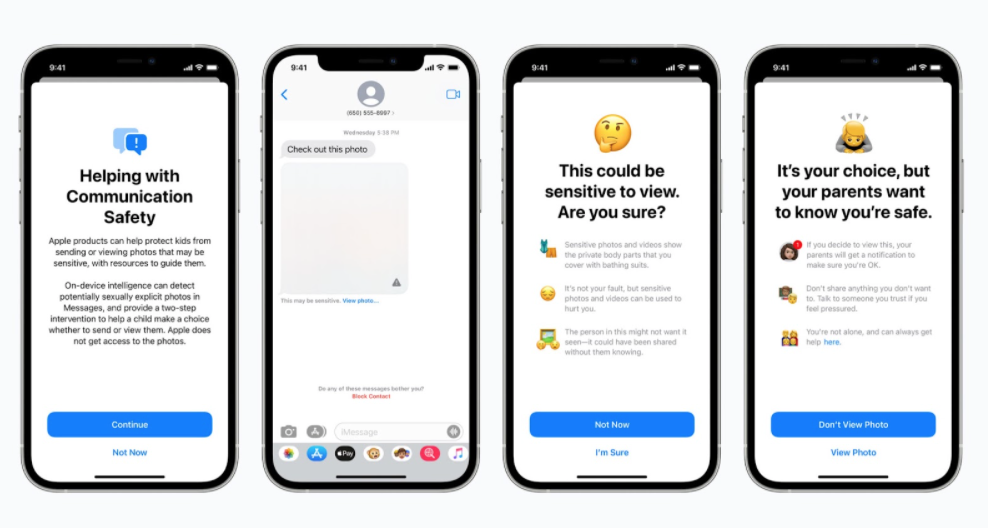

New features in the Messages app will use on-device machine learning to warn children if they are about to send or view a sexually explicit picture.

Apple will also update Siri and Search to help children report sexual abuse and to warn adults searching for such content.

Should Apple be doing any of this in the first place?

Under US law, Apple is not required to search for CSAM, but it must report any CSAM it detects.

Most major tech companies already have systems in place to identify such material. In 2020, Facebook reported 20 million instances of CSAM to the relevant authorities, more than 50,000 a day. By contrast, Apple reported only 265 in the same year.
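
As a quick check of the daily figure, dividing Facebook’s 2020 total by the 366 days in 2020 (a leap year) gives:

```latex
\frac{20{,}000{,}000\ \text{reports}}{366\ \text{days}} \approx 54{,}645\ \text{reports per day}
```

Using 365 days instead changes the result only slightly (about 54,795), so either way the total works out to more than 50,000 reports a day.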

Such figures highlight Apple’s shortcomings in preventing the creation and spread of CSAM, prompting the company to take action. Apple said that it waited until a privacy-friendly solution was technically possible before building this system.

As these features reignite the privacy-versus-security debate, we would like to hear your opinion. Should Apple detect and report CSAM, or will such a change inevitably compromise our privacy? Let us know in the comments.