With technology integrating into every aspect of our lives, it’s essential to reflect on the downsides that come along with it.

When Covid-19 pushed the world into lockdown last year, people everywhere were forced to move their work and lives online. Surprisingly, the transition from real life to digital was pretty seamless. Meetings turned into Zoom calls, conversations turned into WhatsApp chats, and sports turned into home workout sessions.

It turns out that most of our lives existed digitally anyway; the pandemic just highlighted how ready we were for the transformation. So as we race further into a high-tech future, let’s take this opportunity to examine when tech can go wrong.

This week, we gathered the top three most dystopian technologies that already exist to give you something to think about.

Deep-fakes

AI is expected to enhance our lives significantly, but it also opens the door to plenty of dystopian technology. One example that already exists is deepfake technology.

Deep-fakes are videos in which the likeness of the person originally recorded is replaced with someone else’s. For example, someone can record a video of themselves on their phone, then use AI to edit it so that a public figure appears to be the person in the video.

Such technology is already in use today to create fake news, celebrity adult videos, and more.

While algorithms have been developed that can detect deep-fakes, no software can detect them accurately 100% of the time. Moreover, even if such software existed, malicious developers could update the technology used in deep-fakes to circumvent detection.

Deep-fakes cast doubt on the authenticity of videos shared online. As the technology gets better, we may see incredibly realistic footage online that’s been fully modified to depict a fake reality. As a result, we may live in a future where someone can share a video online, and we will have no way of knowing if it ever really happened.

Genetic Modification (GMO)

Genomics – the science of genes – has launched many life-saving industries around the world. One industry that has taken off as a result of genomics is genetic modification (GMO). Notably, CRISPR gene editing, published in 2012, allowed for cheap and easy genetic modification of living organisms.

Over the past decade, GMO grew from a small discovery to one of the most significant biological advancements we’ve ever witnessed. The journal Science named CRISPR gene editing its 2015 Breakthrough of the Year.

GMO is currently used in the medical field to help save lives. For example, scientists use it to modify bacteria so that they produce life-saving drugs. In 2017, scientists edited human embryos to prevent a fatal disease, raising awareness of the benefits of such technology.

It’s not all sunshine and rainbows, though. Genetic modification opens up the door for a highly dystopian future. In fact, it’s being used to create such a future right now.

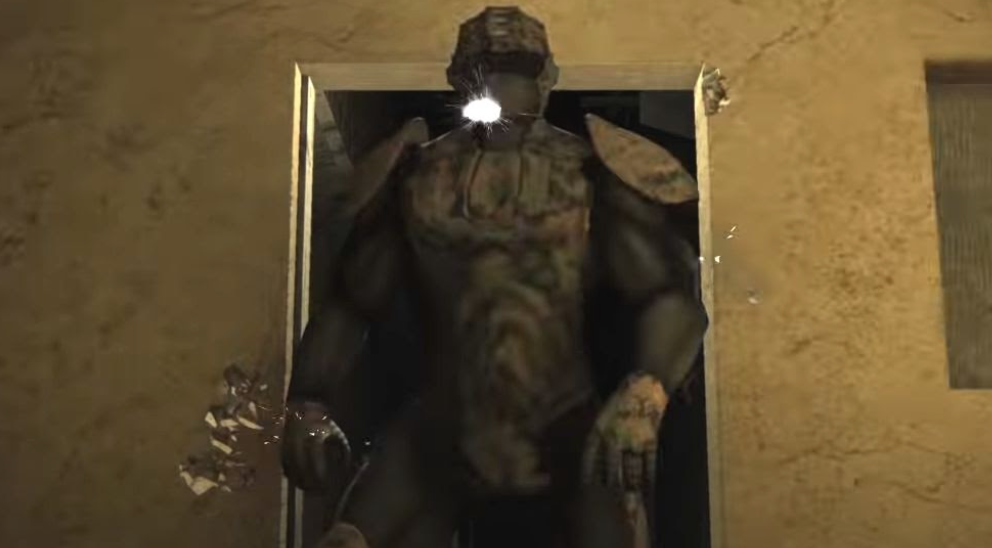

Governments around the world have expressed both concern about and interest in using GMO in their militaries. The goal is to modify a soldier’s genes, turning them into what’s been described as a “super soldier”.

In 2014, then US President Barack Obama announced a tech initiative to journalists, jokingly describing it as the US “building an Iron Man”.

Three years later, Russian President Vladimir Putin warned that the development of a super soldier could be “worse than a nuclear bomb”. He went on to explain that such a person “can be a genius mathematician, a brilliant musician or a soldier, a man who can fight without fear, compassion, regret or pain.”

The BBC reported on accusations made by a former US official, alleging that China is developing such technology for its military too.

Elon Musk, CEO of Tesla, co-founded Neuralink in 2016. While the company isn’t directly involved in modifying genes, it’s developing brain implants that can bring the power of AI to the human mind. A year later, he warned that humanity must merge with machines to stay relevant in an AI future.

The dangers of GMO aren’t limited to malicious intent. Many fear that scientists working behind closed doors could accidentally inflict large-scale societal harm. In 2016, US intelligence classified GMO as a weapon of mass destruction. Theoretically, an error made when modifying a gene could unintentionally wipe out our resistance to common diseases such as the flu, posing an existential risk to humanity.

In fact, during 2021, both the Trump and Biden administrations raised concerns over the possibility of Covid-19 originating in a Wuhan lab, with US President Joe Biden urging the intelligence community to discover the origin of the virus. Whether or not the virus was artificial, the fact that a lab origin hasn’t been ruled out yet underscores the potential risks of such technology.

Permanent digital traces

Digital traces are created by the devices, apps and websites that we use every day. If you posted a status on Facebook, sent a message on WhatsApp, and recorded a run five years ago, your data from all three activities probably still exists on a server somewhere.

Our data is collected faster than we realise and stored for longer than we can remember.

One of the risks posed by permanent digital traces is the destruction of privacy and security in modern society.

AOL, one of the most prominent search engines in the early 21st century, released anonymised user searches to the public in 2006.

While AOL did not include the direct identities of the users, the searches themselves contained enough data to identify some of them. By analysing the search logs, The New York Times was able to discover the users behind various searches.

While many digital leaks have taken place since 2006, AOL’s example highlights the power companies have over users and how a few bad decisions could expose millions of users worldwide.
